Model Mania: Why so many models?

Looking into the "why" behind a blitz of recent model announcements

Do we need more models?

With robust and rapidly evolving models from extremely well-funded startups like OpenAI, Anthropic, and Cohere already in the market, as well as offerings like Bard from Google, it’s worth taking a step back to understand why the introduction of an open source model like Llama 2 is making such a splash in the online conversation.

First, we should state the obvious, which we sometimes forget: despite the rapid improvement and commercialization of LLMs, there are still some critical issues blocking or limiting success in some important use cases.

The big outstanding issues:

These aren’t the only outstanding issues, but they are the ones driving the continued diversification of model sources and formats.

Private data in models

There are many reasons why people want to add private data to models, but the big three are:

Lack of recent data in models (something that likely won’t change given the high cost of training).

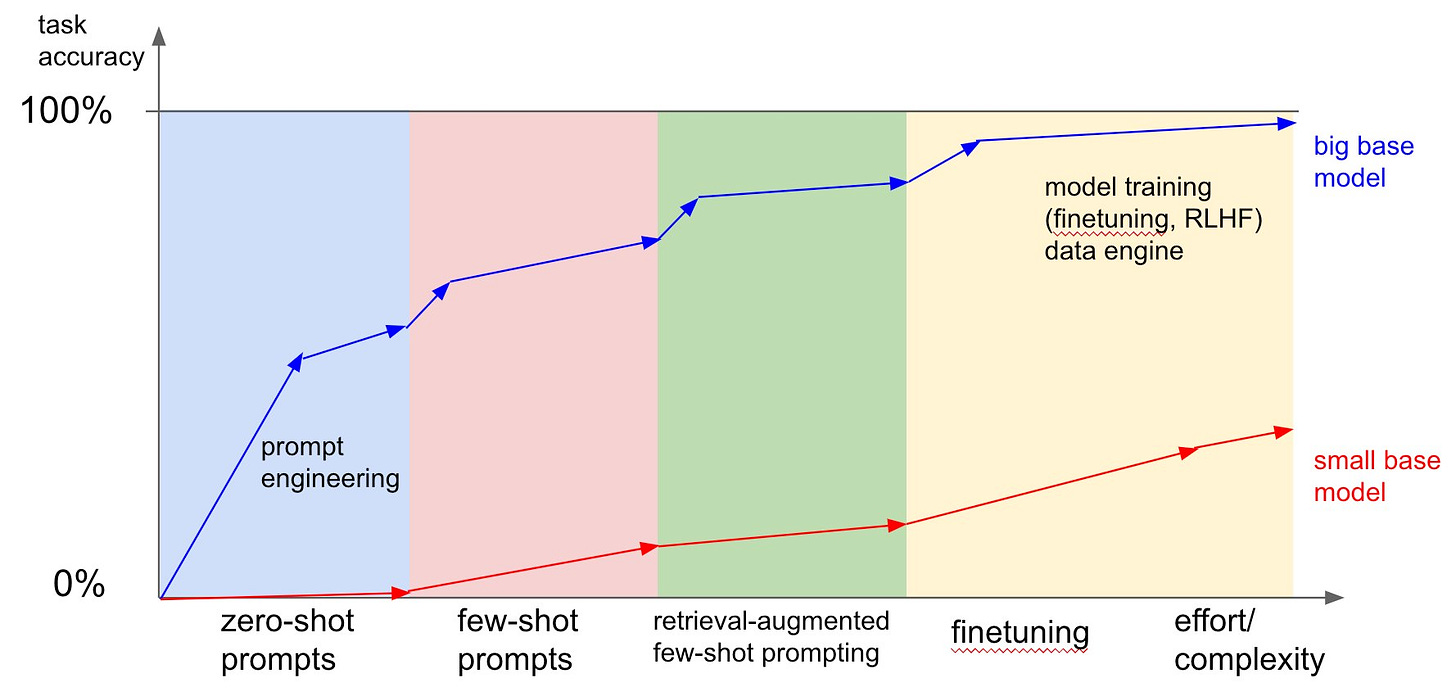

Seeking a competitive edge by improving accuracy or use case coverage with owned data. While techniques are evolving to insert knowledge at execution time, the top level of performance (critical for some use cases, meaningless for others) will likely still be achieved through additional training specific to the use case. This comes at significant cost, though, so we usually advise our clients to work their way up in accuracy step by step and invest only where a small marginal performance improvement translates into meaningful impact.

Sensitive or restricted data. Some use cases may require data that is sensitive or restricted in use that cannot be shared with a cloud provider. These use cases will require dedicated infrastructure, controls and

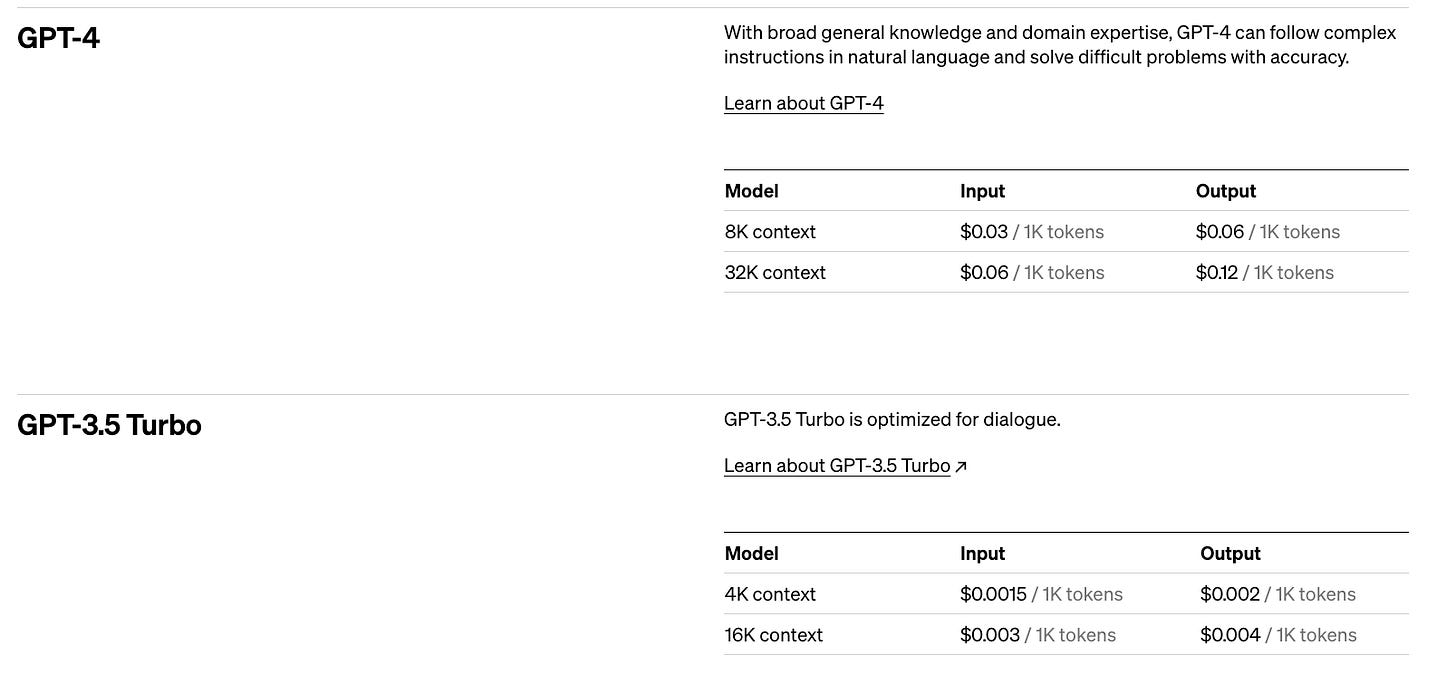

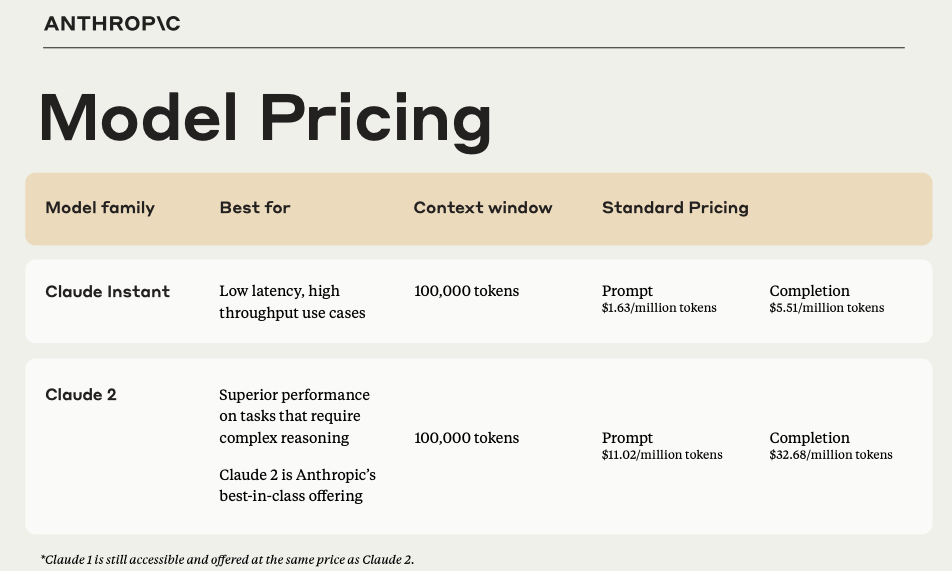

Linear price models

While pay-per-use pricing models have democratized AI availability, they aren’t always beneficial for the top tier of consumers, since cost keeps scaling linearly with usage.
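To make the trade-off concrete, here is a minimal sketch comparing a linear pay-per-use bill with a fixed-plus-variable cost of running a model yourself. Every price and volume in it is a hypothetical placeholder rather than a real vendor rate, and the function names are ours, chosen for illustration only.

```python
from typing import Optional


def pay_per_use_cost(million_tokens: float, price_per_million: float) -> float:
    """Linear pricing: the bill grows in direct proportion to usage."""
    return million_tokens * price_per_million


def self_hosted_cost(million_tokens: float, fixed_monthly: float,
                     variable_per_million: float) -> float:
    """Fixed infrastructure cost plus a smaller variable cost per unit of usage."""
    return fixed_monthly + million_tokens * variable_per_million


def break_even_volume(price_per_million: float, fixed_monthly: float,
                      variable_per_million: float) -> Optional[float]:
    """Monthly volume (in millions of tokens) above which self-hosting is cheaper.

    Returns None when the pay-per-use price is not higher than the
    self-hosted variable cost, because the fixed cost is then never recovered.
    """
    margin = price_per_million - variable_per_million
    if margin <= 0:
        return None
    return fixed_monthly / margin


# Hypothetical numbers, for illustration only (not real vendor pricing).
PRICE_PER_MILLION = 30.0       # pay-per-use price per million tokens
FIXED_MONTHLY = 2000.0         # monthly cost of dedicated infrastructure
VARIABLE_PER_MILLION = 10.0    # remaining variable cost when self-hosting

threshold = break_even_volume(PRICE_PER_MILLION, FIXED_MONTHLY, VARIABLE_PER_MILLION)
print(f"Break-even volume: {threshold} million tokens per month")

for volume in (50, 200):
    api = pay_per_use_cost(volume, PRICE_PER_MILLION)
    hosted = self_hosted_cost(volume, FIXED_MONTHLY, VARIABLE_PER_MILLION)
    print(f"{volume}M tokens: pay-per-use={api}, self-hosted={hosted}")
```

Below the break-even volume the pay-per-use option stays cheaper, which is why linear pricing mostly becomes a concern for the heaviest consumers.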

Observability of data inputs

Another big rumbling behind the scenes is around the fair use of the inputs used to create commercial models. Recent details have come out on the FTC probe of OpenAI (NY Times), creating doubt about the legality of using publicly available website data to train models.

This Twitter thread does a good job recapping the particulars of the FTC query:

Lack of Performance Control

I am somewhat hesitant to add this one since there is a lot of politics behind the scenes, but I’ll share it along with the reasons to be somewhat skeptical. Last week, Databricks published a research study reporting that GPT-4 performance had degraded significantly since its initial March release (Study). The media primarily focused on the GPT-4 degradation, but the researchers also show that GPT-3.5 has massively improved over the same timeframe. The key takeaway (as noted by the researchers as well) is that the performance of actively updated models may vary, and monitoring may be needed. The reality for large companies with products based on these models, though, is that monitoring is not highly valuable without a means to correct degradation.

A few considerations in interpreting these results:

Databricks, the study sponsor, agreed to acquire MosaicML last month for $1.3 billion. MosaicML is a company focused on making it easier to create and modify your own LLMs rather than use an out-of-the-box (OOTB) model like GPT-4.

OpenAI does offer “snapshot” models that do not update over time. These are currently kept for three months after a new version is released, but could be held for longer in the future. One example listed on their website:

gpt-4-0613: Snapshot of gpt-4 from June 13th 2023 with function calling data. Unlike gpt-4, this model will not receive updates, and will be deprecated 3 months after a new version is released.

GPT-4 currently makes up a paltry less than 3% of active OpenAI use as of their comments last month, though it’s an important sliver in that it likely covers the most accuracy-dependent use cases.
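The monitoring takeaway above could be put into practice with a small regression check: re-run a fixed set of prompts against the model on a schedule and compare accuracy against a stored baseline. The sketch below is an illustration under stated assumptions, not the method used in the study. The prompts, the 0.05 tolerance, and the stub functions standing in for real model calls are all hypothetical.

```python
from typing import Callable, List, Tuple

EvalSet = List[Tuple[str, str]]

# Hypothetical fixed evaluation set of (prompt, expected answer) pairs.
EVAL_SET: EvalSet = [
    ("Is 17 a prime number? Answer yes or no.", "yes"),
    ("Is 21 a prime number? Answer yes or no.", "no"),
    ("Is 29 a prime number? Answer yes or no.", "yes"),
    ("Is 33 a prime number? Answer yes or no.", "no"),
]


def accuracy(ask_model: Callable[[str], str], eval_set: EvalSet) -> float:
    """Fraction of prompts whose normalized answer matches the expected one."""
    correct = sum(
        1
        for prompt, expected in eval_set
        if ask_model(prompt).strip().lower() == expected
    )
    return correct / len(eval_set)


def has_degraded(baseline: float, current: float, tolerance: float = 0.05) -> bool:
    """Flag a drop in accuracy larger than the (hypothetical) tolerance."""
    return baseline - current > tolerance


# Stubs standing in for real model calls (assumptions for this sketch).
def earlier_version_stub(prompt: str) -> str:
    """Pretend model version that answers every prompt correctly."""
    return dict(EVAL_SET)[prompt]


def later_version_stub(prompt: str) -> str:
    """Pretend model version that always answers 'No'."""
    return "No"


baseline_accuracy = accuracy(earlier_version_stub, EVAL_SET)
current_accuracy = accuracy(later_version_stub, EVAL_SET)
if has_degraded(baseline_accuracy, current_accuracy):
    print(f"Accuracy dropped from {baseline_accuracy} to {current_accuracy}")
```

In practice the stubs would be replaced by calls to the deployed model. As noted above, the alert only pays off if there is a way to respond to it, for example by falling back to a pinned snapshot.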

How Llama 2 may address some of these concerns:

The main differentiators for Llama 2 are that it is a family of models (with low-latency and high-accuracy versions, similar to OpenAI and Anthropic offerings) and that its performance is closing in on commercialized private models. While Llama 2 is not on par with GPT-3.5/4 in most use cases, it’s getting close enough to give people hope that they can fine-tune the model to compete in specialized cases. Also of note, Llama 2 has a commercial license, meaning that developers can use it in their commercially available products.

Open Source models carry a lot of appeal in addressing the above issues:

Private Data:

Flexibility of deployment options and custom training allow for updates to the core model with private data.

Observable Inputs:

Open source models tend to offer better transparency about the training data used, since there is less incentive to protect a competitive advantage through the model. It’s worth noting, though, that an open source model does not include direct access to its training data.

Linear Pricing:

Open source models are available both through model infrastructure providers like Hugging Face and Together and for deployment on your own infrastructure, which allows consumers to switch from a pay-per-use model to a more fixed-cost model as use scales up (there are still variable costs for model use).

Stable Performance:

Self-deployment allows users to control model updates and changes more completely than commercial models CURRENTLY allow. I expect this gap to narrow as API products mature, but it will likely not fully close, as maintaining many model versions carries a cost for providers.

Generative Post produced by Gen AI Partners